Xinlei Yu (Leo)

Xinlei (Leo) Yu is a first-year PhD student at the University of Southern California, advised by Dr. Heather Culbertson. Before this, Xinlei worked on Google’s Android XR. Xinlei’s research lies at the intersection of HCI and robotics, focusing specifically on haptics, aerial and humanoid robots, and robot perception.

I want to extend my heartfelt thanks to the many brilliant colleagues and mentors I’ve had the pleasure of working with. Their support and guidance, from insightful conversations that sparked new ideas to steady direction whenever I felt lost, have been instrumental in my journey, and for that I am sincerely grateful.

I am happy to discuss new research projects, innovative ideas, and potential collaborations. Please feel free to contact me at xinleiyu@usc.edu.

Ongoing Project

Working on end-to-end wearable navigation with autonomous ungrounded robots that guide users through indoor environments via haptic feedback.

And More

Publication

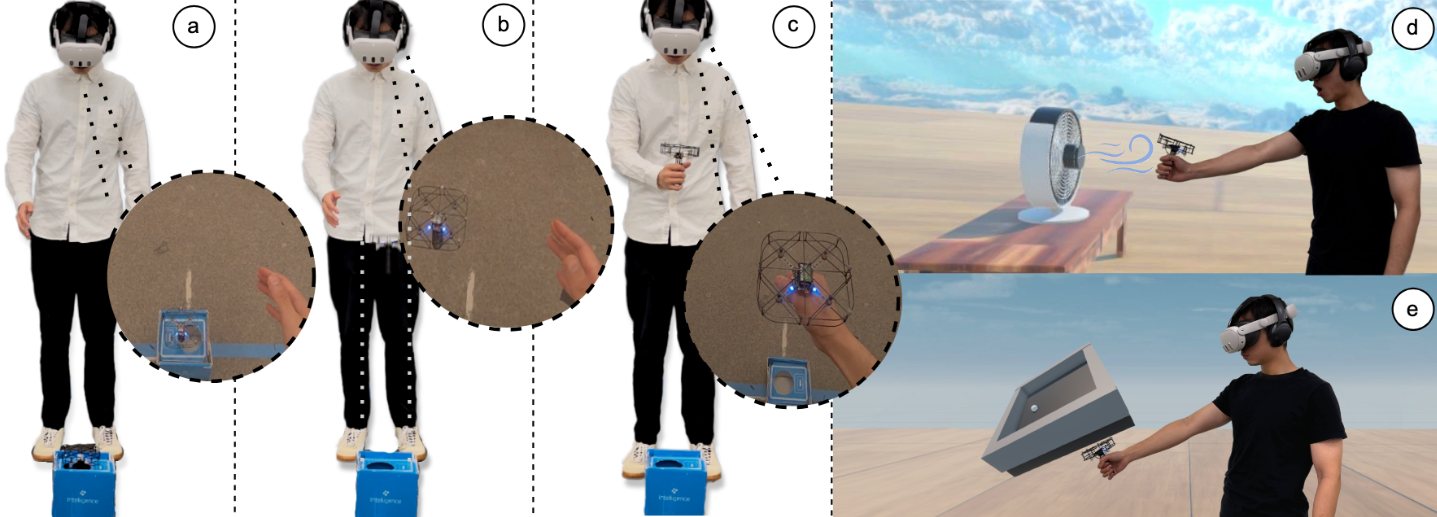

Propeller-based Handheld Force-Feedback Device for Navigation
Submitted to IEEE Transactions on Haptics (under review)
Xinlei Yu, Yang Chen, and Heather Culbertson

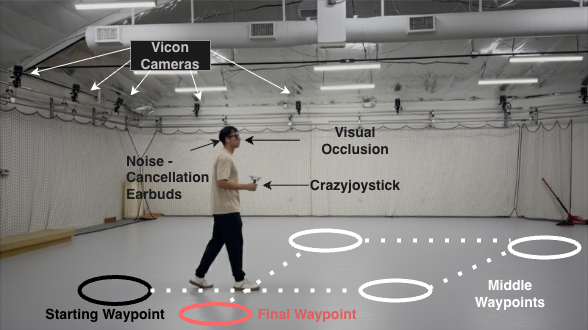

CrazyJoystick: A Handheld Flyable Joystick for Providing On-Demand Haptic Feedback in Virtual Reality
IEEE World Haptics Conference 2025 [video]
Yang Chen*, Xinlei Yu*, and Heather Culbertson

Fun Projects

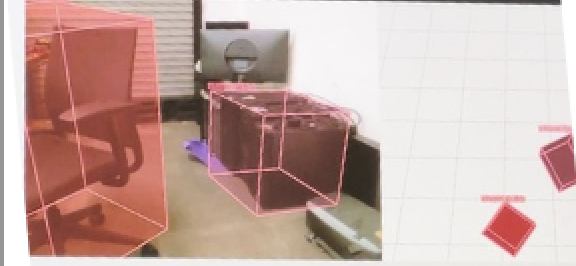

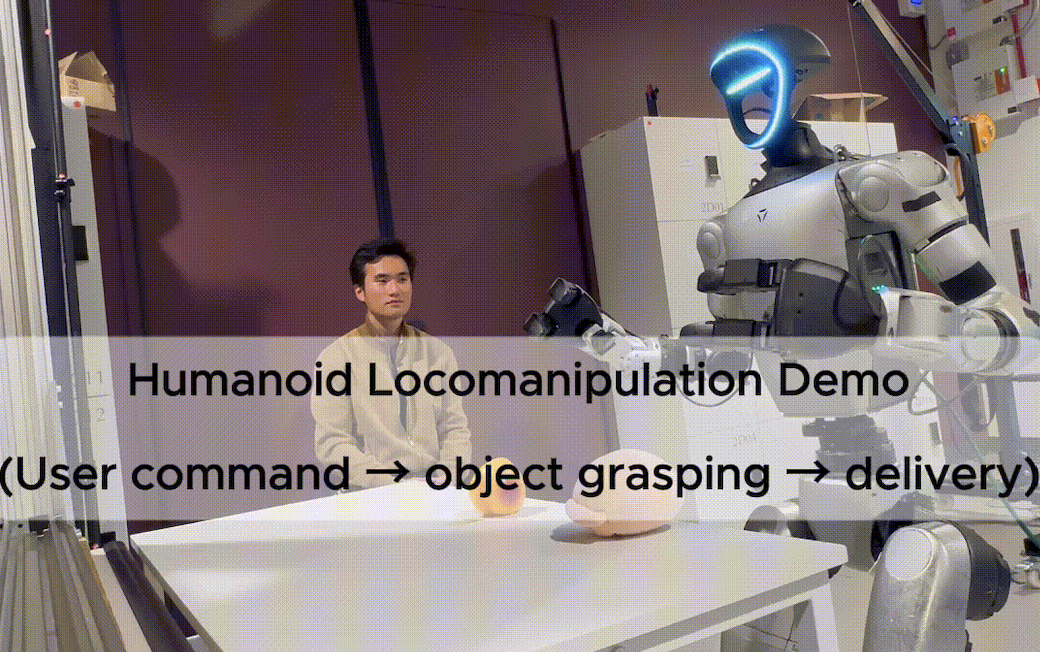

Semantic 3D Perception and VLM-based Planning for Humanoid Loco-Manipulation [Demo Video Alternative]

Real-Time UAV Teleoperation via an Immersive VR Interface [Demo Video] [System Diagram]

VR Dressing Room [Demo Video]