Presentation and Demo

Exploring Electrotactile Stimulation as a Modality for Sensation Illusion on the Arm. Presented at SCR’23. Xinlei Yu, Xin Zhu, Xiaopan Zhang, and Heather Culbertson. [abstract]

Tummy Time Toy: An Infant Learning Toy. Demo at NSF DARE’23. Xinlei Yu, Arya Salgaonkar, Stacey Dusing, and Francisco Valero-Cuevas. [Demo Video] [Pilot Study Video]

Current Research Projects

I’m working on a haptics project that investigates human perception of, and emotional responses to, electro-tactile stimulation. The longer-term goal is to build a personalized, pleasant electro-tactile device that works seamlessly with other stimulus modalities such as visual and auditory stimuli.

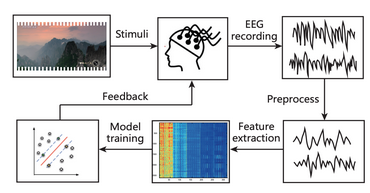

I’m also working on a project that aims to improve EEG-based emotion recognition by applying Kalman filtering and smoothing to EEG data from the public SEED dataset. The ultimate goal is to quantify human emotional states more precisely through EEG signal processing. More precise quantification can, in turn, improve both the evaluation of perceptual properties and human-computer interaction.
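
The page does not specify the filter design. As a rough illustration of the filtering-and-smoothing idea, here is a minimal scalar Kalman filter followed by a Rauch-Tung-Striebel (RTS) smoother in plain Python. The sketch assumes that each EEG feature channel (for example, a per-window band-power value) follows a random walk observed with Gaussian noise. The noise variances `q` and `r` and the function name `kalman_smooth` are hypothetical choices, not the project’s actual settings.

```python
from typing import List, Tuple


def kalman_smooth(z: List[float], q: float = 1e-3, r: float = 1e-1,
                  x0: float = 0.0, p0: float = 1.0) -> Tuple[List[float], List[float]]:
    """Filter, then smooth, a 1-D feature sequence (hypothetical helper).

    Assumed model:  x_t = x_{t-1} + w_t,  w_t ~ N(0, q)   (random walk)
                    z_t = x_t + v_t,      v_t ~ N(0, r)   (noisy observation)
    Returns (filtered_means, smoothed_means).
    """
    n = len(z)
    xf, pf = [0.0] * n, [0.0] * n  # filtered mean / variance at each step
    xp, pp = [0.0] * n, [0.0] * n  # one-step predicted mean / variance
    x, p = x0, p0
    # Forward pass: standard Kalman filter.
    for t in range(n):
        # Predict: a random walk keeps the mean and inflates the variance by q.
        xp[t], pp[t] = x, p + q
        # Update with observation z[t].
        k = pp[t] / (pp[t] + r)  # Kalman gain
        x = xp[t] + k * (z[t] - xp[t])
        p = (1.0 - k) * pp[t]
        xf[t], pf[t] = x, p
    # Backward pass: RTS smoother (state transition is 1 for a random walk).
    xs = xf[:]
    for t in range(n - 2, -1, -1):
        c = pf[t] / pp[t + 1]  # smoother gain
        xs[t] = xf[t] + c * (xs[t + 1] - xp[t + 1])
    return xf, xs
```

The smoother uses future samples as well as past ones. As a result, it typically tracks slow changes in emotional state with less lag than the forward filter alone. This makes it more suited to offline analysis of a recorded dataset than to real-time use.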

Past Research Projects

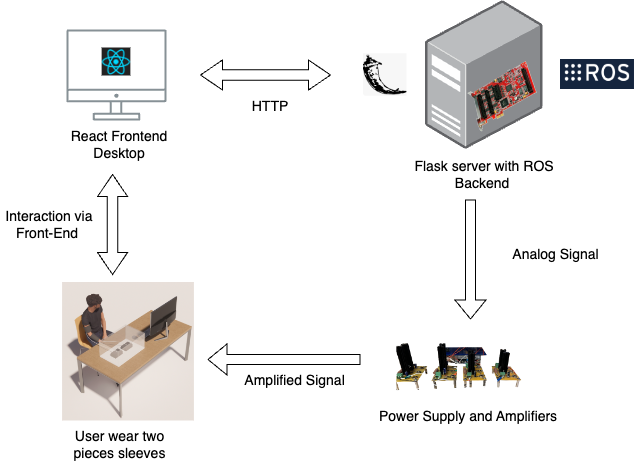

Multi-armed bandit-based calibration for Electro-tactile Stimulation: Developed an electro-tactile display using a Sensory PCI card together with a set of power supplies and amplifiers. Designed a multi-armed bandit-based calibration method that searches for the signal parameter setting producing the most pleasant stimulation. [GitHub (partially available)]
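
The page does not say which bandit algorithm the calibration uses. The following is therefore only a sketch of the general idea, using UCB1. Each arm is one candidate stimulation setting, and the reward is a pleasantness rating. The parameter grid, the 0-to-1 rating scale, and the `rate` callback are hypothetical placeholders. In a real session, the rating would come from the participant after each stimulus.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical arm: (pulse frequency in Hz, pulse width in microseconds).
Arm = Tuple[float, float]


def ucb1_calibrate(arms: Sequence[Arm], rate: Callable[[Arm], float],
                   n_trials: int, c: float = 2.0) -> Tuple[Arm, List[float]]:
    """Choose settings with UCB1 and return the arm with the best mean rating.

    `rate(arm)` is an assumed callback returning a pleasantness score in [0, 1].
    """
    k = len(arms)
    if n_trials < k:
        raise ValueError("need at least one trial per arm")
    counts = [0] * k
    means = [0.0] * k
    for t in range(1, n_trials + 1):
        if t <= k:
            i = t - 1  # initialization: try every setting once
        else:
            # UCB1 index = empirical mean + exploration bonus.
            i = max(range(k),
                    key=lambda j: means[j] + math.sqrt(c * math.log(t) / counts[j]))
        reward = rate(arms[i])
        counts[i] += 1
        means[i] += (reward - means[i]) / counts[i]  # incremental mean update
    best = max(range(k), key=lambda j: means[j])
    return arms[best], means
```

UCB1 concentrates trials on settings that are rated highly, but it still occasionally revisits uncertain ones. This helps keep the number of unpleasant stimuli a participant experiences low compared with an exhaustive grid sweep.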

Tummy Time Toy: A computer vision-based toy that assists infant motor learning (US patent application under review). The interactive toy rewards infants with lights and music when they lift their heads past a set threshold, encouraging the development of prone motor skills. The primary goal is to study whether babies can learn to control their bodies during tummy time with the toy’s assistance, which could improve their muscle control and increase their tolerance for tummy time. [video] [GitHub (available soon)]
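
The reward logic can be sketched as a threshold trigger on a head-lift measurement. The page does not describe the vision pipeline. This sketch therefore assumes that a pose estimator already supplies per-frame 2-D image coordinates for the infant’s nose and shoulder midpoint, seen from the side. The angle formula, the 30-degree threshold, and the frame count are hypothetical values chosen for illustration. Requiring several consecutive frames above the threshold, plus a lower release threshold, keeps a single noisy frame from toggling the lights and music.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels; y grows downward


def head_lift_deg(nose: Point, shoulder_mid: Point) -> float:
    """Hypothetical measure: angle of the shoulder->nose vector above horizontal."""
    dx = nose[0] - shoulder_mid[0]
    dy = shoulder_mid[1] - nose[1]  # flip sign because image y points down
    return math.degrees(math.atan2(dy, abs(dx)))


def reward_events(angles: Iterable[float], on_deg: float = 30.0,
                  off_deg: float = 25.0, min_frames: int = 5) -> List[bool]:
    """Per-frame reward on/off state with debouncing and hysteresis."""
    active, streak, out = False, 0, []
    for a in angles:
        if not active:
            # Count consecutive frames at or above the trigger threshold.
            streak = streak + 1 if a >= on_deg else 0
            if streak >= min_frames:
                active = True  # start lights and music
        elif a < off_deg:
            active, streak = False, 0  # head lowered: stop the reward
        out.append(active)
    return out
```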

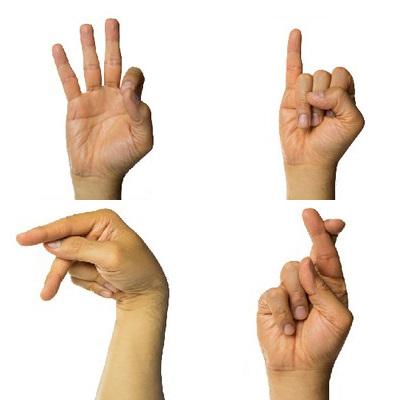

American Sign Language: We present an alphabet translator for American Sign Language (ASL) that uses Convolutional Neural Networks (CNNs) and Residual Neural Networks (ResNets) to classify RGB images of ASL alphabet hand gestures. We carefully tuned the hyperparameters to achieve high training accuracy and good test performance. [paper] [GitHub]
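
The key idea that distinguishes a ResNet from a plain CNN is the identity shortcut. A block learns a residual F(x) and outputs ReLU(F(x) + x), which makes deep networks easier to train. The NumPy sketch below shows only that forward pass on a single-channel feature map with hypothetical 3x3 kernels. It is not the project’s model, which would normally be built and trained in a deep learning framework with multi-channel convolutions and batch normalization.

```python
import numpy as np


def conv3x3_same(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """2-D cross-correlation of an (H, W) map with a 3x3 kernel, zero padding."""
    h, w = x.shape
    padded = np.pad(x, 1)  # one pixel of zeros on every side
    out = np.zeros_like(x, dtype=float)
    for di in range(3):
        for dj in range(3):
            # Shifted view of the padded map, weighted by one kernel tap.
            out += k[di, dj] * padded[di:di + h, dj:dj + w]
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def residual_block(x: np.ndarray, k1: np.ndarray, k2: np.ndarray) -> np.ndarray:
    """Basic residual block: ReLU(F(x) + x) with F = conv -> ReLU -> conv."""
    f = conv3x3_same(relu(conv3x3_same(x, k1)), k2)
    return relu(f + x)  # identity shortcut; "same" padding keeps shapes equal
```

If the learned residual is zero, the block reduces to ReLU(x) and passes its input through almost unchanged. This is why stacking many such blocks does not degrade training the way stacking plain layers can.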

Selected Past Projects

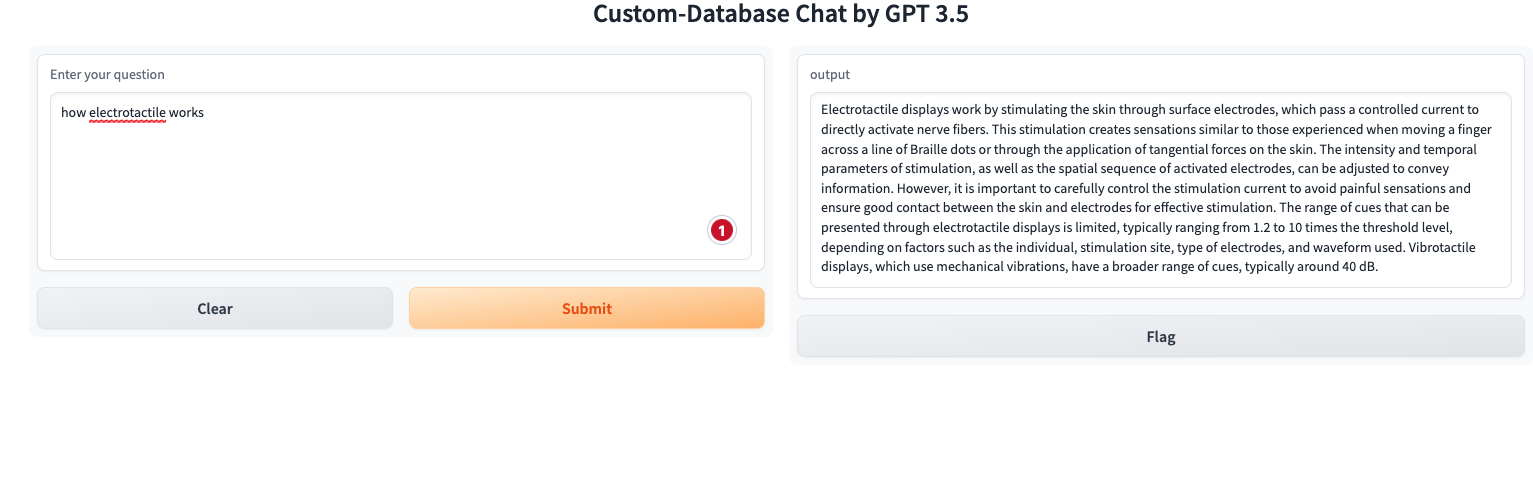

ChatGPT-based Chatbot with a Haptics Knowledge Base: A GPT-based chatbot backed by a custom database of haptics research. It is built with LangChain (to call the embedding model), LlamaIndex (vector indexing), the OpenAI API, and Gradio (lightweight UI). It gives researchers the capabilities of ChatGPT combined with an up-to-date, domain-specific research database. [GitHub]
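
The core retrieval-augmented step can be illustrated without any of those libraries:

1. Embed the question.
2. Rank the stored document chunks by cosine similarity.
3. Prepend the best matches to the prompt sent to the language model.

In this sketch, `embed` is a hypothetical bag-of-words stand-in for a real embedding model, and the helper names are illustrative. Nothing here reproduces the actual LangChain or LlamaIndex APIs.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Sequence


def embed(text: str) -> Dict[str, float]:
    """Hypothetical stand-in for an embedding model: a word-count vector."""
    return dict(Counter(re.findall(r"[a-z0-9]+", text.lower())))


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(v * b.get(w, 0.0) for w, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(question: str, chunks: Sequence[str], top_k: int = 2) -> List[str]:
    """Return the top_k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return list(ranked[:top_k])


def build_prompt(question: str, chunks: Sequence[str], top_k: int = 2) -> str:
    """Assemble a context-grounded prompt to send to the chat model."""
    context = "\n\n".join(retrieve(question, chunks, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

In a production setup, the chunk embeddings would be computed once and stored in a vector index rather than recomputed per query, which is the role a library such as LlamaIndex plays.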

Artsy App: An Android and web application that lets users search for artists in Artsy’s database and view detailed information about them (artwork images, biography, description, etc.). [GitHub]

Cleaning Robot: Programmed an iRobot Create cleaning robot in C on a TI LaunchPad so that it autonomously navigates a pipe maze to a designated destination. Along the way, it maps the obstacles it encounters to a user interface displayed in PuTTY. Designed a decision-making algorithm that chooses a movement direction based on calibrated radar and ultrasonic sensor input.
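
The direction-choosing step can be sketched as picking the widest clear gap in a sensor sweep. The page does not detail the algorithm, and the original project was written in C, so this is only a Python sketch under several assumptions:

- The robot sweeps its sensors across a set of scan angles.
- At each angle, the two calibrated readings are fused conservatively by taking the smaller distance.
- The robot turns toward the center of the widest run of angles whose fused distance exceeds a clearance threshold.

The threshold, the fusion rule, and the function name `choose_heading` are hypothetical.

```python
from typing import List, Optional, Sequence


def choose_heading(angles: Sequence[float], ir_cm: Sequence[float],
                   sonar_cm: Sequence[float],
                   clear_cm: float = 50.0) -> Optional[float]:
    """Return the scan angle at the center of the widest clear gap, or None."""
    # Assumed fusion rule: trust the more pessimistic (shorter) reading.
    dist: List[float] = [min(a, b) for a, b in zip(ir_cm, sonar_cm)]
    best_start, best_len = 0, 0
    start: Optional[int] = None
    # A trailing 0.0 sentinel closes a gap that runs to the end of the sweep.
    for i, d in enumerate(dist + [0.0]):
        if d > clear_cm:
            if start is None:
                start = i  # a clear gap begins here
        elif start is not None:
            if i - start > best_len:  # gap just ended; keep it if widest so far
                best_start, best_len = start, i - start
            start = None
    if best_len == 0:
        return None  # everything blocked: the caller might back up and rescan
    # Middle index of the gap (rounds toward the later angle for even widths).
    return angles[best_start + best_len // 2]
```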